如何理解SiamRPN++?

如何理解SiamRPN++?

在進入主題以前,你須要理解目標跟蹤、孿生網絡結構。ios

目標跟蹤:

- 使用視頻序列第一幀的圖像(包括bounding box的位置),來找出目標出如今後序幀位置的一種方法。

孿生網絡結構:

在進入到正式理解SiamRPN++以前,爲了更好的理解這篇論文,咱們須要先了解一下孿生網絡的結構。網絡

- 孿生網絡是一種度量學習的方法,而度量學習又被稱爲類似度學習。

孿生網絡結構被較早地利用在人臉識別的領域(《Learning a Similarity Metric Discriminatively, with Application to Face Verification》)。其思想是將一個訓練樣本(已知類別)和一個測試樣本(未知類別)輸入到兩個CNN(這兩個CNN每每是權值共享的)中,從而得到兩個特徵向量,而後經過計算這兩個特徵向量的的類似度,類似度越高代表其越多是同一個類別。在上面這篇論文中,衡量這種類似度的方法是L1距離。app

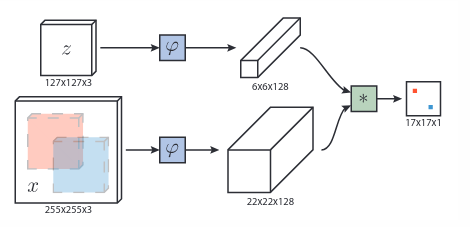

在目標領域中,最先利用這種思想的是SiamFC,其網絡結構如上圖。輸入的

z是第一幀ROI(也就是在手動在第一幀選擇的bounding box),x則是後一幀的圖片。二者分別經過兩個CNN,獲得兩張特徵圖。再經過一次卷積操做(L=(22-6)/1+1=17),得到最後形狀爲17*17*1的特徵圖。在特徵圖上響應值越高的位置,表明其越可能有目標存在。其想法就相似於對上面的人臉識別孿生網絡添加了一次卷積操做。得到特徵圖後,能夠得到相應的損失函數。y={-1,+1}爲真實標籤,v是特徵圖相應位置的值。下列第一個式子是特徵圖上每個點的loss,第二個式子是對第一個式子做一個平均,第三個式子就是優化目標。less

-

However, both Siamese-FC and CFNet are lack of boundingbox regression and need to do multi-scale test which makesit less elegant. The main drawback of these real-time track-ers is their unsatisfying accuracy and robustness comparedto state-of-the-art correlation filter approaches.可是,Siamese-FC和CFNet都沒有邊界框迴歸,所以須要進行多尺度測試,這使得它不太美觀。這些實時跟蹤器的主要缺點是,與最新的相關濾波器方法相比,它們的精度和魯棒性不使人滿意。ide

- 爲了解決SiamFC的這兩個問題,就有了SiamRPN。能夠看到前半部分Siamese Network部分和SiamFC如出一轍(通道數有變化)。區別就在於後面的RPN網絡部分。特徵圖會經過一個卷積層進入到兩個分支中,在Classification Branch中的

4*4*(2k*256)特徵圖等價於有2k個4*4*256形狀的卷積核,對20*20*256做卷積操做,因而能夠得到17*17*2k的特徵圖,Regression Branch操做同理。而RPN網絡進行的是多任務的學習。17*17*2k作的是區分目標和背景,其會被分爲k個groups,每一個group會有一層正例,一層負例。最後會用softmax + cross-entropy loss進行損失計算。17*17*4k一樣會被分爲k groups,每一個group有四層,分別預測dx,dy,dw,dh。而k的值即爲生成的anchors的數目。而關於anchor box的運行機制,能夠看下方第二張圖(來自SSD)。

SiamRPN++

讓咱們正式進入主題:函數

Abstract & Introduction

However, Siamese track-ers still have an accuracy gap compared with state-of-the-art algorithms and they cannot take advantage of features from deep networks, such as ResNet-50 or deeper.(以往的siamese網絡沒法處理較深的網絡)學習

We observe that all these trackers have built their network upon architecture similar to AlexNet [23] and tried several times to train a Siamese tracker with more sophisticated architecture like ResNet[14] yet with no performance gain. (用的都是AlexNet,而在ResNet上效果不佳)測試

Since the target may appear at anyposition in the search region, the learned feature representation for the target template should stay spatial invariant,and we further theoretically find that, among modern deep architectures, only the zero-padding variant of AlexNet satisfies this spatial invariance restriction.(只有AlexNet沒有padding層才知足spatial invariance平移不變性)優化

- 因爲padding層會破壞平移不變性,而越深的網絡像ResNet-50等具有大量padding(這裏簡要解釋一下,卷積層中的參數有stride、padding等。對於stride,只有在目標位移量(shift)是stride的整數倍時,才知足平移不變性。對於padding,同一目標在圖片邊緣和在圖片內部的響應值不一樣)。所以須要解決平移不變性問題。因而做者提出了一種打破平移不變性限制的採樣策略。

By analyzing the Siamese network structure for cross-correlations, we find that its two network branches are highly imbalanced in terms of parameter number; thereforewe further propose a depth-wise separable correlation struc-ture which not only greatly reduces the parameter numberin the target template branch, but also stabilizes the trainingprocedure of the whole model. (分類分支和迴歸分支參數量嚴重不平衡)ui

- 這一點從SiamRPN的結構圖中便可看出。

Siamese Tracking with Very Deep Networks

- 對於原來siamese網絡的分析:原先的孿生跟蹤網絡能夠視爲如下的式子:

\[ f(z,x)=\sigma(z)*\sigma(x)+b \]

而這種設計,會致使兩個限制:

The contracting part and the feature extractor used inSiamese trackers have an intrinsic restriction forstricttranslation invariance,f(z,x[4τj]) =f(z,x)[4τj],where[4τj]is the translation shift sub window opera-tor, which ensures the efficient training and inference.(只用某個ROI的區域做爲x進行運算應該和整體進行運算後取該ROI的結果一致)

The contracting part has an intrinsic restriction forstructure symmetry,i.e.f(z,x′) =f(x′,z), which isappropriate for the similarity learning.(即根據類似性度量的思想,用z做爲卷積核和用x的目標位置做爲卷積核效果一致)

前者也就是嚴格平移不變性限制,後者就是目標類似限制。

Spatial Aware Sampling Strategy

- 若是特徵提取網絡不具備良好的平移不變性的時候(也就是採用ResNet或者MobileNet等擁有padding層的網絡),此時再按照SiamFC的訓練方式,即將全部正樣本都放在中心,那麼此時會學習到位置偏見,也就是對在中心的目標響應值最大。測試方式爲,使用三種目標位置按照不一樣均勻分佈的數據集進行訓練,shift值越大表示均勻分佈的範圍越大,可視化結果以下圖:

- 而這一設置均勻分佈不一樣偏移量的策略被稱爲

spatial aware sampling strategy。做者爲了肯定這一策略的有效性,又在VOT2016和VOT2018上作了測試,發現隨着shift範圍的變大,EAO指標(簡單來講就是視頻每一幀的跟蹤精度a的均值,而a使用的是lOU)整體呈現上升趨勢。在這些測試中,64 pixels是一個良好的偏移量。

- 而一旦消除了深層網絡對中心位置的學習偏見,就能夠根據須要利用任何結構的網絡以進行視覺跟蹤。

SiamRPN對ResNet的transfer

既然解決了位置偏見問題,就能夠上手使用ResNet網絡了。做者在這裏主要是對ResNet-50結構進行修改。ResNet-50結構以下:

其中CONV BLOCK(輸入輸出維度不一樣,shortcut用1*1 kernel改變維度):

ID BLOCK(輸入輸出尺寸一致,便於加深網絡):

The original ResNet has a large stride of 32 pixels,which is not suitable for dense Siamese network prediction.As shown in Fig.3, we reduce the effective strides at the last two block from 16 pixels and 32 pixels to 8 pixels by modifying the conv4 and conv5 block to have unit spatial stride, and also increase its receptive field by dilated convo-lutions [27]. An extra 1×1 convolution layer is appended to each of block outputs to reduce the channel to 256.

Reset常見的stride值爲32,但對於跟蹤任務,先後幀物體差距可能很小,所以做者將最後兩個block的stride改爲了8 pixels,並且會加入一個額外的1*1 convolution layer將通道數變爲256。網絡會將stage 3/4/5的結果放入到SiamRPN網絡中進行Layer-wise Aggregation。

Layer-wise Aggregation多層特徵融合

In the previous works which only use shallow networkslike AlexNet, multi-level features cannot provide very dif-ferent representations. However, different layers in ResNetare much more meaningful considering that the receptivefield varies a lot. Features from earlier layers will mainlyfocus on low level information such as color, shape, are es-sential for localization, while lacking of semantic informa-tion; Features from latter layers have rich semantic informa-tion that can be beneficial during some challenge scenarioslike motion blur, huge deformation.The use of this rich hierarchical information is hypothesized to help tracking.

對於ResNet這種很深的網絡,不一樣stage所得到的特徵也不一樣。淺層stage得到的是Low-level圖像特徵,而較深的block則偏向於獲取語義信息。所以,使用好不一樣層次的特徵有助於跟蹤任務。

Since the output sizes of the three RPN modules have thesame spatial resolution, weighted sum is adopted directly onthe RPN output. A weighted-fusion layer combines all theoutputs.

上面已說,網絡會提取後三個block的結果放入SiamRPN網絡,而這三個RPN模塊的輸出結果形狀是相同的,因此做者這裏採用的是直接加權求和各個結果:

Depthwise Cross Correlation

在Siamese網絡中,後面衡量類似度的是十分重要的。如SiamFC的方式就是上圖(a)的方式,來預測一個單通道的Response Map。而SiamRPN使用的是上圖(b)的方式,因爲引入了anchor,經過多個獨立模板層對一個檢測層的卷積操做來得到多通道獨立的張量。而這就致使了RPN層的參數量要遠大於特徵提取層。所以引入了本文的方法——DW-XCorr。

之因此(b)會產生那麼多參數,緣由在於在進入SiamRPN的RPN層時,存在一個用於提高通道數的卷積層。而(c)使用的是DW卷積操做(下面第二張圖),也就是一個channel負責對一個channel進行卷積操做。以下第一張圖,輸入兩個從ResNet得到的特徵層(channel數目是一致的),先一樣分別經過一個卷積層(因爲須要學的任務不一樣,因此卷積層參數不共享),再分別進行DW卷積操做,而後兩分支再分別經過一層不一樣的卷積層改變通道數以得到想要的分類和迴歸結果。這樣一來,就能夠減小計算量,且使得兩個分支更加平衡。

而經過這種方式,在不一樣的通道對其相應的類會有不錯的響應,而其餘類則會有所抑制,也就是這種方式可以記住一些不一樣類別的語義信息。而(2)的up-channel方式卻沒有這種性質。

實驗結果

在成功識別率,SiamRPN++位於第一位,在精度(識別框中心位置)方面,位於第三。

EAO值位於第一,遠超第二名。

對於比較長的視頻序列,SiamRPN++也位居第一的位置。

- 1. SiamRPN++

- 2. SiamRPN++算法詳解

- 3. SiamRPN論文筆記

- 4. SiamRPN++論文筆記

- 5. SiamRPN++閱讀筆記

- 6. 如何理解runtime

- 7. 如何理解SelfAttention

- 8. 如何理解synchronized

- 9. 如何理解RPC

- 10. 如何理解Axis?

- 更多相關文章...

- • XSD 如何使用? - XML Schema 教程

- • 如何僞造ARP響應? - TCP/IP教程

- • Docker 清理命令

- • ☆技術問答集錦(13)Java Instrument原理

-

每一个你不满意的现在,都有一个你没有努力的曾经。

- 1. Appium入門

- 2. Spring WebFlux 源碼分析(2)-Netty 服務器啓動服務流程 --TBD

- 3. wxpython入門第六步(高級組件)

- 4. CentOS7.5安裝SVN和可視化管理工具iF.SVNAdmin

- 5. jedis 3.0.1中JedisPoolConfig對象缺少setMaxIdle、setMaxWaitMillis等方法,問題記錄

- 6. 一步一圖一代碼,一定要讓你真正徹底明白紅黑樹

- 7. 2018-04-12—(重點)源碼角度分析Handler運行原理

- 8. Spring AOP源碼詳細解析

- 9. Spring Cloud(1)

- 10. python簡單爬去油價信息發送到公衆號

- 1. SiamRPN++

- 2. SiamRPN++算法詳解

- 3. SiamRPN論文筆記

- 4. SiamRPN++論文筆記

- 5. SiamRPN++閱讀筆記

- 6. 如何理解runtime

- 7. 如何理解SelfAttention

- 8. 如何理解synchronized

- 9. 如何理解RPC

- 10. 如何理解Axis?