GFS Distributed File System Cluster (Hands-on!!!)

GFS Distributed File System Cluster Project

Cluster Environment

Volume Types

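| Volume name | Volume type | Size | Bricks |
|---|---|---|---|
| dis-volume | Distributed | 40G | node1(/b1), node2(/b1) |
| stripe-volume | Striped | 40G | node1(/c1), node2(/c1) |
| rep-volume | Replicated | 20G | node3(/b1), node4(/b1) |
| dis-stripe | Distributed striped | 40G | node1(/d1), node2(/d1), node3(/d1), node4(/d1) |
| dis-rep | Distributed replicated | 20G | node1(/e1), node2(/e1), node3(/e1), node4(/e1) |

In the commands below the volumes are named dis-vol, stripe-vol, rep-vol, dis-stripe and dis-rep, and the bricks /b1, /c1, /d1 and /e1 correspond to /data/sdb1, /data/sdc1, /data/sdd1 and /data/sde1.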
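As a rough guide to the Size column, the usable capacity of a volume is the raw total of its bricks, divided by the replica count when the volume is replicated; distributed and striped volumes simply add their bricks together. A minimal shell sketch of that arithmetic, assuming equal-sized bricks and ignoring filesystem overhead (`usable_size` is a hypothetical helper, not a GlusterFS command):

```shell
#!/bin/bash
# Hypothetical helper: estimate the usable size of a GlusterFS volume.
# Assumes all bricks are the same size and ignores filesystem overhead.
#   $1 = number of bricks, $2 = size of one brick in GB,
#   $3 = replica count (omit or pass 1 for distributed/striped volumes)
usable_size() {
    local bricks=$1 brick_size=$2 replica=${3:-1}
    # Each file is stored $replica times, so divide the raw total;
    # distribution and striping just add the bricks together.
    echo $(( bricks * brick_size / replica ))
}
```

For example, `usable_size 2 20 2` prints 20: two 20 GB bricks in a `replica 2` volume give 20 GB of usable space, while `usable_size 2 20` prints 40 for the same bricks distributed or striped.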

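Lab Preparation

1. Add four disks to each of the four servers

2. Change the server hostnames

Rename the servers node1, node2, node3 and node4 respectively, for example on the first server:

```
[root@localhost ~]# hostnamectl set-hostname node1
[root@localhost ~]# su
```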

3. Format and mount the disks on all four servers

Here we use a script to partition, format and mount the disks.

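```
#Go to the /opt directory
[root@node1 ~]# cd /opt
#Disk formatting and mounting script
[root@node1 opt]# vim a.sh
```

```bash
#!/bin/bash
# Interactively partition, format (XFS) and mount one data disk per run

echo "the disks exist list:"
# Match English ("Disk") as well as Chinese ("磁盘") fdisk output
fdisk -l | grep -E '^(Disk|磁盘) /dev/sd[a-z]'
echo "=================================================="

PS3="chose which disk you want to create:"

# Offer every disk except the system disk sda, plus a quit entry
select VAR in $(ls /dev/sd* | grep -o 'sd[b-z]' | uniq) quit
do
    case $VAR in
    sda)
        fdisk -l /dev/sda
        break ;;
    sd[b-z])
        # Create one primary partition covering the whole disk:
        # n = new, p = primary, three empty answers accept the default
        # partition number, first sector and last sector, w = write
        printf 'n\np\n\n\n\nw\n' | fdisk /dev/$VAR

        # Make an XFS filesystem on the new partition
        mkfs.xfs -i size=512 /dev/${VAR}1 &> /dev/null

        # Create the mount point, record it in /etc/fstab and mount it
        mkdir -p /data/${VAR}1 &> /dev/null
        echo "/dev/${VAR}1 /data/${VAR}1 xfs defaults 0 0" >> /etc/fstab
        mount -a &> /dev/null
        break ;;
    quit)
        break ;;
    *)
        echo "wrong disk, please check again" ;;
    esac
done
```

```
#Make the script executable
[root@node1 opt]# chmod +x a.sh
```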

Push the script to the other three servers with scp:

```
scp a.sh root@192.168.45.134:/opt
scp a.sh root@192.168.45.130:/opt
scp a.sh root@192.168.45.136:/opt
```

Run the script on all four servers, once for each disk (sdb, sdc, sdd and sde).

The following is just a sample run:

```
[root@node1 opt]# ./a.sh
the disks exist list:
==================================================
1) sdb
2) sdc
3) sdd
4) sde
5) quit
chose which disk you want to create:1 // choose the disk to format
Welcome to fdisk (util-linux 2.23.2).

Changes will remain in memory only, until you decide to write them.
Be careful before using the write command.

Device does not contain a recognized partition table
Building a new DOS disklabel with disk identifier 0x37029e96.

Command (m for help): Partition type:
   p   primary (0 primary, 0 extended, 4 free)
   e   extended
Select (default p): Partition number (1-4, default 1): First sector (2048-41943039, default 2048): Using default value 2048
Last sector, +sectors or +size{K,M,G} (2048-41943039, default 41943039): Using default value 41943039
Partition 1 of type Linux and of size 20 GiB is set

Command (m for help): The partition table has been altered!

Calling ioctl() to re-read partition table.
Syncing disks.
```

Check the mounts on each of the four servers (for example with `df -h`).

4. Configure the hosts file

Edit it on the first server, node1:

```
#Append the following lines to the end of the file
vim /etc/hosts
192.168.45.133 node1
192.168.45.130 node2
192.168.45.134 node3
192.168.45.136 node4
```
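If you prefer not to edit the file by hand, the same entries can be appended with a small shell function that skips lines already present, so re-running it does not create duplicates. A minimal sketch; `add_hosts` is a hypothetical name, not part of any package:

```shell
#!/bin/bash
# Hypothetical helper: append "IP hostname" entries to a hosts file, skipping
# entries that are already present, so it is safe to run more than once.
#   $1 = hosts file (normally /etc/hosts), then pairs of IP and hostname
add_hosts() {
    local file=$1 entry
    shift
    while [ $# -ge 2 ]; do
        entry="$1 $2"
        # -q quiet, -x whole-line match, -F fixed string (dots are literal)
        grep -qxF "$entry" "$file" 2>/dev/null || echo "$entry" >> "$file"
        shift 2
    done
}
```

Usage on node1: `add_hosts /etc/hosts 192.168.45.133 node1 192.168.45.130 node2 192.168.45.134 node3 192.168.45.136 node4`. Only exact, single-space lines are recognized as duplicates.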

Push the hosts file to the other servers and the client with scp:

```
#Push /etc/hosts to the other hosts
[root@node1 opt]# scp /etc/hosts root@192.168.45.130:/etc/hosts
root@192.168.45.130's password:
hosts 100% 242 23.6KB/s 00:00
[root@node1 opt]# scp /etc/hosts root@192.168.45.134:/etc/hosts
root@192.168.45.134's password:
hosts 100% 242 146.0KB/s 00:00
[root@node1 opt]# scp /etc/hosts root@192.168.45.136:/etc/hosts
root@192.168.45.136's password:
hosts 100% 242 146.0KB/s 00:00
```

Check on the other servers that the file has arrived.

Stop the firewall and switch SELinux to permissive mode on all servers and the client:

```
[root@node1 ~]# systemctl stop firewalld.service
[root@node1 ~]# setenforce 0
```

Set up the yum repository on the client and the servers:

```
#Go to the yum repository directory
[root@node1 ~]# cd /etc/yum.repos.d/
#Create an empty directory
[root@node1 yum.repos.d]# mkdir abc
#Move all CentOS-* repo files into abc
[root@node1 yum.repos.d]# mv CentOS-* abc
#Create the private yum repository
[root@node1 yum.repos.d]# vim GLFS.repo
[demo]
name=demo
baseurl=http://123.56.134.27/demo
gpgcheck=0
enabled=1

[gfsrepo]
name=gfsrepo
baseurl=http://123.56.134.27/gfsrepo
gpgcheck=0
enabled=1
#Reload the yum repositories
[root@node1 yum.repos.d]# yum list
```

Install the required packages:

```
[root@node1 yum.repos.d]# yum -y install glusterfs glusterfs-server glusterfs-fuse glusterfs-rdma
```

Repeat the same steps on the other three servers.

Start glusterd on all four servers and enable it at boot:

```
[root@node1 yum.repos.d]# systemctl start glusterd.service
[root@node1 yum.repos.d]# systemctl enable glusterd.service
```

Add the peers (from node1):

```
[root@node1 yum.repos.d]# gluster peer probe node2
peer probe: success.
[root@node1 yum.repos.d]# gluster peer probe node3
peer probe: success.
[root@node1 yum.repos.d]# gluster peer probe node4
peer probe: success.
```

Check the peer information on the other servers:

```
[root@node1 yum.repos.d]# gluster peer status
```
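To check the probe result from a script rather than by eye, you can count the connected peers in the `gluster peer status` output. A minimal sketch, assuming the usual output format in which each peer has a line `State: Peer in Cluster (Connected)`; `connected_peers` is a hypothetical helper:

```shell
#!/bin/bash
# Hypothetical helper: read `gluster peer status` output on stdin and print
# the number of peers whose state is "Peer in Cluster (Connected)".
# Assumes the output format described above.
connected_peers() {
    # -c counts matching lines, -F treats the pattern as a fixed string
    grep -cF 'State: Peer in Cluster (Connected)'
}
```

Usage: `gluster peer status | connected_peers` should print 3 on any of the four servers once all of them have joined the pool.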

Create the distributed volume

```
#Create the distributed volume
[root@node1 yum.repos.d]# gluster volume create dis-vol node1:/data/sdb1 node2:/data/sdb1 force
#Check its information
[root@node1 yum.repos.d]# gluster volume info dis-vol
#List the existing volumes
[root@node1 yum.repos.d]# gluster volume list
#Start the volume
[root@node1 yum.repos.d]# gluster volume start dis-vol
```

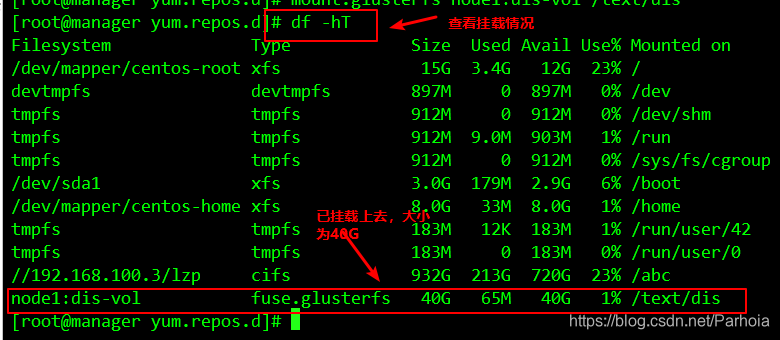

Mount it on the client

```
#Create the mount point (with parent directories)
[root@manager yum.repos.d]# mkdir -p /text/dis
#Mount the volume just created on that mount point
[root@manager yum.repos.d]# mount.glusterfs node1:dis-vol /text/dis
```

Create the striped volume

```
#Create the volume
[root@node1 yum.repos.d]# gluster volume create stripe-vol stripe 2 node1:/data/sdc1 node2:/data/sdc1 force
#List the existing volumes
[root@node1 yum.repos.d]# gluster volume list
dis-vol
stripe-vol
#Start the striped volume
[root@node1 yum.repos.d]# gluster volume start stripe-vol
volume start: stripe-vol: success
```

Mount it on the client

```
#Create the mount point
[root@manager yum.repos.d]# mkdir /text/strip
#Mount the striped volume
[root@manager yum.repos.d]# mount.glusterfs node1:/stripe-vol /text/strip/
```

Create the replicated volume

```
#Create the replicated volume
[root@node1 yum.repos.d]# gluster volume create rep-vol replica 2 node3:/data/sdb1 node4:/data/sdb1 force
volume create: rep-vol: success: please start the volume to access data
#Start the replicated volume
[root@node1 yum.repos.d]# gluster volume start rep-vol
volume start: rep-vol: success
```

Mount the replicated volume on the client

```
[root@manager yum.repos.d]# mkdir /text/rep
[root@manager yum.repos.d]# mount.glusterfs node3:rep-vol /text/rep
```

Create the distributed striped volume

```
#Create the distributed striped volume
[root@node1 yum.repos.d]# gluster volume create dis-stripe stripe 2 node1:/data/sdd1 node2:/data/sdd1 node3:/data/sdd1 node4:/data/sdd1 force
volume create: dis-stripe: success: please start the volume to access data
#Start the distributed striped volume
[root@node1 yum.repos.d]# gluster volume start dis-stripe
volume start: dis-stripe: success
```
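Because the brick arguments follow the same pattern on every node, the list can also be generated. The order matters: GlusterFS groups consecutive bricks into stripe (or replica) sets in the order they are given, so here node1/node2 form one set and node3/node4 the other. A minimal sketch; `brick_list` is a hypothetical helper that only prints text and does not call gluster:

```shell
#!/bin/bash
# Hypothetical helper: print a brick list such as
# "node1:/data/sdd1 node2:/data/sdd1 ..." for `gluster volume create`.
#   $1 = partition name under /data (e.g. sdd1), remaining args = node names,
#        in the order the bricks should appear
brick_list() {
    local part=$1
    shift
    local node
    local -a out=()
    for node in "$@"; do
        out+=("$node:/data/$part")
    done
    # "${out[*]}" joins the elements with the first character of IFS (a space by default)
    echo "${out[*]}"
}
```

For example: `gluster volume create dis-stripe stripe 2 $(brick_list sdd1 node1 node2 node3 node4) force`. The substitution is left unquoted so that each brick becomes a separate argument.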

Mount it on the client

```
[root@manager yum.repos.d]# mkdir /text/dis-strip
[root@manager yum.repos.d]# mount.glusterfs node4:dis-stripe /text/dis-strip/
```

Create the distributed replicated volume

```
#Create the distributed replicated volume
[root@node2 yum.repos.d]# gluster volume create dis-rep replica 2 node1:/data/sde1 node2:/data/sde1 node3:/data/sde1 node4:/data/sde1 force
volume create: dis-rep: success: please start the volume to access data
#Start the distributed replicated volume
[root@node2 yum.repos.d]# gluster volume start dis-rep
volume start: dis-rep: success
#List the existing volumes
[root@node2 yum.repos.d]# gluster volume list
dis-rep
dis-stripe
dis-vol
rep-vol
stripe-vol
```

Mount it on the client

```
[root@manager yum.repos.d]# mkdir /text/dis-rep
[root@manager yum.repos.d]# mount.glusterfs node3:dis-rep /text/dis-rep/
```

------------------------ We have now created and mounted all the volumes; next we test them.

First, create five 40 MB files on the client:

```
[root@manager yum.repos.d]# dd if=/dev/zero of=/demo1.log bs=1M count=40
40+0 records in
40+0 records out
41943040 bytes (42 MB) copied, 0.0175819 s, 2.4 GB/s
[root@manager yum.repos.d]# dd if=/dev/zero of=/demo2.log bs=1M count=40
40+0 records in
40+0 records out
41943040 bytes (42 MB) copied, 0.269746 s, 155 MB/s
[root@manager yum.repos.d]# dd if=/dev/zero of=/demo3.log bs=1M count=40
40+0 records in
40+0 records out
41943040 bytes (42 MB) copied, 0.34134 s, 123 MB/s
[root@manager yum.repos.d]# dd if=/dev/zero of=/demo4.log bs=1M count=40
40+0 records in
40+0 records out
41943040 bytes (42 MB) copied, 1.55335 s, 27.0 MB/s
[root@manager yum.repos.d]# dd if=/dev/zero of=/demo5.log bs=1M count=40
40+0 records in
40+0 records out
41943040 bytes (42 MB) copied, 1.47974 s, 28.3 MB/s
```
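The five `dd` commands differ only in the file name, so they can be written as a loop. A minimal sketch; `make_demo_files` is a hypothetical helper, and its directory argument (default `/`, as in the commands above) is an addition for convenience:

```shell
#!/bin/bash
# Hypothetical helper: create demo1.log ... demo5.log, 40 MiB of zeros each,
# in the given directory (default /, as in the article).
make_demo_files() {
    local dir=${1:-/}
    dir=${dir%/}   # strip a trailing slash so "/" yields "/demo1.log"
    local i
    for i in 1 2 3 4 5; do
        # 1M x 40 = 41943040 bytes; dd's transfer summary is discarded
        dd if=/dev/zero of="$dir/demo$i.log" bs=1M count=40 2>/dev/null
    done
}
```

Running `make_demo_files` with no argument produces the same /demo1.log ... /demo5.log files as above.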

Then copy the five files to each volume:

```
[root@manager yum.repos.d]# cp /demo* /text/dis
[root@manager yum.repos.d]# cp /demo* /text/strip
[root@manager yum.repos.d]# cp /demo* /text/rep
[root@manager yum.repos.d]# cp /demo* /text/dis-strip
[root@manager yum.repos.d]# cp /demo* /text/dis-rep
```

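Check the contents of the volumes

Check the distributed volume

Check the striped volume

Check the replicated volume

Check the distributed striped volume

Check the distributed replicated volume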

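Failure test

Shut down the node2 server and observe the result:

```
[root@manager yum.repos.d]# ls /text/
dis dis-rep dis-strip rep strip
[root@manager yum.repos.d]# ls /text/dis
demo1.log demo2.log demo3.log demo4.log
[root@manager yum.repos.d]# ls /text/dis-rep
demo1.log demo2.log demo3.log demo4.log demo5.log
[root@manager yum.repos.d]# ls /text/dis-strip/
demo5.log
[root@manager yum.repos.d]# ls /text/rep/
demo1.log demo2.log demo3.log demo4.log demo5.log
[root@manager yum.repos.d]# ls /text/strip/
[root@manager yum.repos.d]#
```

Conclusions:

- The distributed volume is missing demo5.log, the file that was stored on node2's brick.
- The striped volume cannot be accessed, because every file is split across node1 and node2.
- The replicated volume (on node3 and node4) is accessible as normal.
- The distributed striped volume is missing files; only demo5.log, stored on the node3/node4 stripe set, is still visible.
- The distributed replicated volume is accessible as normal.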
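The replicated volumes survive because GlusterFS groups bricks into replica sets in the order they are listed: with `replica 2` and bricks on node1, node2, node3 and node4, node1/node2 and node3/node4 each hold complete copies, so losing node2 still leaves a live copy in every set. A minimal shell sketch of that reasoning, assuming a single failed node (`replica_sets_status` is a hypothetical helper; it does not model striping, where every brick of a set is needed):

```shell
#!/bin/bash
# Hypothetical helper: group bricks into replica sets (every $1 consecutive
# bricks form one set) and report whether each set still has a brick on a
# node that is up.
#   $1 = replica count, $2 = name of the failed node,
#   remaining args = bricks in "node:/path" form, in volume-create order
replica_sets_status() {
    local replica=$1 down=$2
    shift 2
    local -a bricks=("$@")
    local i j members alive
    for (( i = 0; i < ${#bricks[@]}; i += replica )); do
        members=""
        alive=no
        for (( j = i; j < i + replica && j < ${#bricks[@]}; j++ )); do
            members+="${bricks[j]} "
            # The node name is everything before the first ':'
            if [ "${bricks[j]%%:*}" != "$down" ]; then
                alive=yes
            fi
        done
        echo "${members% } -> $alive"
    done
}
```

With a replica count of 1 it models the plain distributed volume: `replica_sets_status 1 node2 node1:/data/sdb1 node2:/data/sdb1` reports `node2:/data/sdb1 -> no`, consistent with demo5.log disappearing from dis-vol.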

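Deleting a volume

A volume must be stopped before it can be deleted, and all nodes in the cluster must be online when you delete it.

```
#Stop the volume
[root@manager yum.repos.d]# gluster volume stop dis-vol
#Delete the volume
[root@manager yum.repos.d]# gluster volume delete dis-vol
```

Access control

```
#Deny only this address
[root@manager yum.repos.d]# gluster volume set dis-vol auth.reject 192.168.45.133
#Allow only this address
[root@manager yum.repos.d]# gluster volume set dis-vol auth.allow 192.168.45.133
```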

Thanks for reading!!!
